Computer Vision and Projection Mapping in Python

Map Between What the Camera Sees and What the Projector Projects

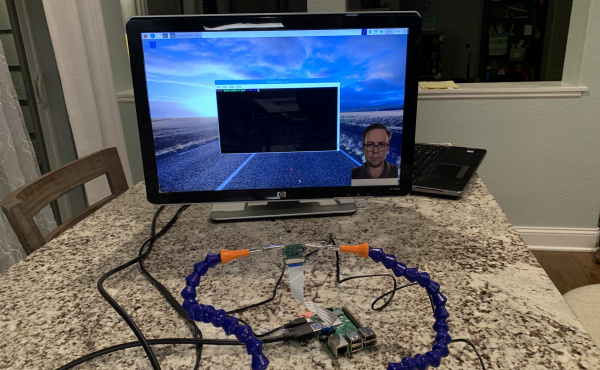

Now that we've found what we're looking for (in this case, faces), it's time to build the image we want to project back into the world. We don't want to project the frame captured by the camera itself: if you were standing in front of the camera and projector, you wouldn't want an image of your own face projected back onto it along with the sprite. Instead, we create a black image that matches our projector resolution and draw our sprite into it. This is where using a monitor instead of a projector changes our setup a little.

If you'd like to continue using a monitor, I'd suggest cutting out a small printout of a head and taping it to something like a Popsicle stick. As you move the head around in front of the monitor, our project should move the sprite around behind it.
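The black projection image described above could be built along the following lines. This is only a minimal sketch, not the tutorial's actual code: the helper names `make_canvas` and `paste_sprite`, the 1280x720 resolution, and the white stand-in sprite are all assumptions made for illustration.

```python
import numpy as np

# Assumed projector resolution; substitute your own display size.
d_width, d_height = 1280, 720


def make_canvas(width, height):
    """Return an all-black, 3-channel, 8-bit image of the given size."""
    return np.zeros((height, width, 3), dtype=np.uint8)


def paste_sprite(canvas, sprite, x, y):
    """Copy sprite onto canvas with its top-left corner at (x, y).

    Any part of the sprite that falls outside the canvas is clipped.
    (Hypothetical helper; a real sprite would likely need alpha blending.)
    """
    h, w = sprite.shape[:2]
    c_h, c_w = canvas.shape[:2]
    # Region of the canvas that the sprite actually overlaps
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, c_w), min(y + h, c_h)
    if x0 >= x1 or y0 >= y1:
        return canvas  # sprite is entirely off-screen
    canvas[y0:y1, x0:x1] = sprite[y0 - y:y1 - y, x0 - x:x1 - x]
    return canvas


canvas = make_canvas(d_width, d_height)
sprite = np.full((50, 100, 3), 255, dtype=np.uint8)  # stand-in white rectangle
paste_sprite(canvas, sprite, 200, 300)
```

Everything outside the sprite stays black, so the projector adds essentially no light there and only the sprite shows up on the scene.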

One of the last pieces we need to tackle is mapping from pixel positions in our captured image to the corresponding pixel positions in the image we will project.

Where we need pixel positions, I used the NumPy function interp():

language:python

for index, (x, y) in enumerate(shape):
    # We need to map our points to the new image from the original
    new_x = int(np.interp(x, [0, f_width], [0, d_width]))
    new_y = int(np.interp(y, [0, f_height], [0, d_height]))

where shape is a list of our facial feature points in (x, y) format, f_width and f_height are the captured image width and height, and d_width and d_height are our display width and height.
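To see the mapping with concrete numbers, here is a small self-contained sketch. The helper name `map_point` and the 640x480 and 1280x720 sizes are assumptions chosen for illustration; only the `np.interp` calls come from the snippet above.

```python
import numpy as np


def map_point(x, y, f_size, d_size):
    """Map a point from captured-frame coordinates to display coordinates.

    f_size and d_size are (width, height) tuples. (Hypothetical helper.)
    """
    f_width, f_height = f_size
    d_width, d_height = d_size
    new_x = int(np.interp(x, [0, f_width], [0, d_width]))
    new_y = int(np.interp(y, [0, f_height], [0, d_height]))
    return new_x, new_y


# Assumed sizes: a 640x480 camera frame and a 1280x720 display
shape = [(0, 0), (320, 240), (640, 480)]
mapped = [map_point(x, y, (640, 480), (1280, 720)) for x, y in shape]
print(mapped)  # [(0, 0), (640, 360), (1280, 720)]
```

One side effect worth knowing: np.interp clamps inputs that fall outside the source range, so a point past the edge of the frame maps to the edge of the display rather than beyond it.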

In other places, I just multiplied by a scale ratio:

language:python

face_width = int(mark.width() * (d_width/f_width))

where mark is a detected face object provided by dlib, and d_width and f_width are the same as above.
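As a quick check of the ratio approach, the sketch below scales a length rather than a position. The helper name `scale_length` and the sample numbers are assumptions, and a plain integer stands in for the value `mark.width()` would return. It also assumes Python 3, where `/` is true division; under Python 2, dividing two integers would floor the ratio.

```python
def scale_length(length, f_dim, d_dim):
    """Scale a length measured in frame pixels to display pixels.

    f_dim and d_dim are the matching frame and display dimensions.
    (Hypothetical helper, assuming Python 3 true division.)
    """
    return int(length * (d_dim / f_dim))


# A face 100 px wide in an assumed 640 px wide frame, shown on a 1280 px wide display
face_width = scale_length(100, 640, 1280)
print(face_width)  # 200
```

Unlike np.interp, a plain ratio does not clamp, which suits sizes such as widths that are not tied to a position inside the frame.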

Pixel coordinates must be whole numbers, so we cast each interpolated or scaled value with int(), which drops the fractional part.